In the evolving landscape of healthcare, Artificial Intelligence (AI) is playing a pivotal role. Foundation Models (FMs), which are large-scale and adaptable neural networks, have emerged as a promising solution to the inherent challenges in ultrasound imaging, such as high variability and intrinsic noise.

Introduction

Ultrasound imaging, a vital diagnostic tool, faces challenges including variability and intrinsic noise. While Meta AI's Foundation Model SAM (Segment Anything Model) has been effective with general imagery, it falls short in medical ultrasound contexts due to its limited training on such data. FMs are trained on a broad range of data, enabling them to learn a wide spectrum of tasks, and they serve as a base upon which specialized models can be built and fine-tuned for specific applications.

GE HealthCare's recent research work⁹ introduces SonoSAMTrack¹, a model designed specifically for ultrasound to help enhance precision and consistency in diagnostics. SonoSAMTrack¹ combines SonoSAM¹, a promptable foundational model for segmenting objects of interest on ultrasound images, with a state-of-the-art contour tracking model that propagates segmentations on 2D+t and 3D ultrasound datasets. SonoSAMTrack¹ was trained on approximately 200,000 ultrasound image-mask pairs drawn from a broad dataset from GE HealthCare devices. In tests, it achieved an average Dice similarity score of over 90%, a metric that indicates a high degree of overlap between segmented and actual regions. Notably, this research has shown that swift and precise segmentation is possible with 2-6 user clicks.

To further amplify capabilities, SonoSAMTrack¹ incorporates advanced tracking techniques. SonoSAMTrack¹ first generates a precise contour, and as subsequent frames are processed, a tracking algorithm propagates that contour, aiding accuracy and continuity. This approach not only assists with automated precision but also allows user intervention for any necessary corrections. To cater to edge devices, a streamlined SonoSAMLite¹ is being studied. Using knowledge distillation, this compact model is designed to maintain near-par performance, making it ideal for real-time applications on resource-constrained devices. Collectively, these advancements underline the transformative potential of AI in refining medical imaging.

The power of Foundation Models

In the domain of healthcare, leveraging AI to enhance patient care, streamline operational efficiencies, and make informed decisions has become increasingly pivotal. Traditionally, the approach to integrating AI into healthcare systems necessitated the retraining of models to accommodate the unique requirements of different patient populations and hospital settings. This conventional method often led to heightened costs, complexity, and the need for specialized personnel, thereby hindering the broad adoption of AI technologies in healthcare domains.

In contrast, FMs, large-scale neural networks, have emerged as a reliable and adaptable foundation for developing AI applications tailored to the healthcare sector². These models have risen to prominence due to their ability to operate as human-in-the-loop AI systems, garnering significant attention. Their initial pre-training stage involves learning from extensive datasets, necessitating significant time and computational power. However, after this pre-training, the model becomes adaptable for reuse and fine-tuning across various subsequent tasks, to meet the diverse needs within healthcare³.

The robustness of FMs is showcased by their ability to adeptly handle a wide array of data types and structures typical in healthcare, such as patient records, imaging data, and genetic information, with high accuracy and consistency. Their adaptability is particularly evident when tasked with localizing models to cater to specific healthcare settings or patient cohorts² ⁴. FMs can be efficiently fine-tuned with a relatively sparse set of examples, removing the need to develop a new model from scratch for each task and thereby conserving both time and computational resources. Existing research⁵ ⁶ indicates that fine-tuning an already pre-trained model demands a smaller labeled dataset than training a model from the ground up, leading to further reductions in data collection and annotation expenses. This feature could facilitate a swift and cost-effective adaptation process for localized implementations, marking a stark departure from the traditional model retraining approach.

Foundation Models: General-purpose AI to unlock a plethora of opportunities

FMs exhibit remarkable robustness and adaptability as AI models, positioning them as ready contenders to address a diverse array of tasks and challenges prevalent in healthcare. Their flexibility and strength can provide a strong foundation for developing AI applications tailored to healthcare. However, on specialized tasks such as ultrasound image segmentation, certain general-purpose FMs like SAM tend to falter. This limitation has catalyzed the development of more targeted models, which are designed to address specific challenges within the medical imaging field.

SonoSAMTrack¹

GE HealthCare's research project SonoSAMTrack¹ stands out with its tailored design for ultrasound imaging. In our testing, it demonstrated improved performance metrics, including:

- A Dice Similarity score⁷ surpassing 90%, indicating segmentation accuracy significantly greater than that of SAM.

- Quick and efficient operation with minimal user input, requiring just 2-6 clicks for precise segmentation.
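To make the Dice Similarity score cited above concrete, here is a minimal sketch of how it can be computed for two binary masks: twice the overlap divided by the total number of foreground pixels in both masks. The function name `dice_score` and the toy masks are illustrative assumptions, not part of the SonoSAMTrack code base.

```python
def dice_score(pred, truth):
    """Dice coefficient between two binary masks given as nested lists of 0/1."""
    pred_flat = [p for row in pred for p in row]
    truth_flat = [t for row in truth for t in row]
    if len(pred_flat) != len(truth_flat):
        raise ValueError("masks must have the same number of pixels")

    # Pixels marked as foreground in both masks.
    intersection = sum(1 for p, t in zip(pred_flat, truth_flat) if p and t)
    # Total foreground pixels across the two masks.
    total = sum(1 for p in pred_flat if p) + sum(1 for t in truth_flat if t)

    if total == 0:
        return 1.0  # convention assumed here: two empty masks agree perfectly
    return 2.0 * intersection / total


# Toy 3x4 masks (hypothetical data): 4 shared foreground pixels, 5 in each mask.
predicted = [[0, 1, 1, 0],
             [0, 1, 1, 0],
             [0, 0, 1, 0]]
reference = [[0, 1, 1, 0],
             [0, 1, 1, 0],
             [0, 1, 0, 0]]

print(dice_score(predicted, reference))  # 2 * 4 / (5 + 5) = 0.8
```

A score of 1.0 means the predicted and reference regions coincide exactly, and 0.0 means they do not overlap at all, so a score above 90% corresponds to a predicted region that overlaps the reference almost completely.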

SonoSAM¹ was trained on a varied dataset of approximately 200,000 ultrasound image-mask pairs acquired on GE HealthCare's array of devices. This breadth helps drive robust performance across various unseen datasets, where the model substantially surpassed competing methods on all crucial metrics.

SonoSAMTrack¹ focuses on segmenting anatomies, lesions, and other essential areas in ultrasound images, despite the typical challenges such as artifacts, poor image quality, and diffused boundaries. Generic models like Meta AI’s SAM, despite being potent in various modalities, struggle with the peculiar characteristics of ultrasound images, such as ambiguous object boundaries and variable texture.

Example ultrasound images demonstrating diversity in regions of interest

We assess and present the performance of SonoSAMTrack¹ across 7 distinct datasets to test its generalization capabilities. These datasets span unseen anatomies such as Adult Heart and Fetal Head, as well as pathologies like breast lesions and musculoskeletal anomalies, and account for variability introduced by different ultrasound systems. Additionally, we incorporate public datasets sourced from various challenges to ensure comprehensive evaluation. SonoSAMTrack¹ demonstrates its adaptability and applicability in ultrasound image segmentation, consistently delivering high-quality results over a wide range of demanding datasets and conditions.

Evaluating AI models on performance and diversity metrics

The distinct outcomes shown in this research when comparing generalist FMs like SAM with specialized ones such as SonoSAMTrack¹ highlight the progressive strides in AI for healthcare applications. This emphasizes the need for a balanced approach of general robustness and specialized precision in AI models to meet the complex requirements of the healthcare sector. The strategic combination of generalist and specialized AI models signifies a step forward towards a nuanced, effective use of AI in healthcare, which is critical for advancing medical imaging and other related areas.

Advanced tracking techniques

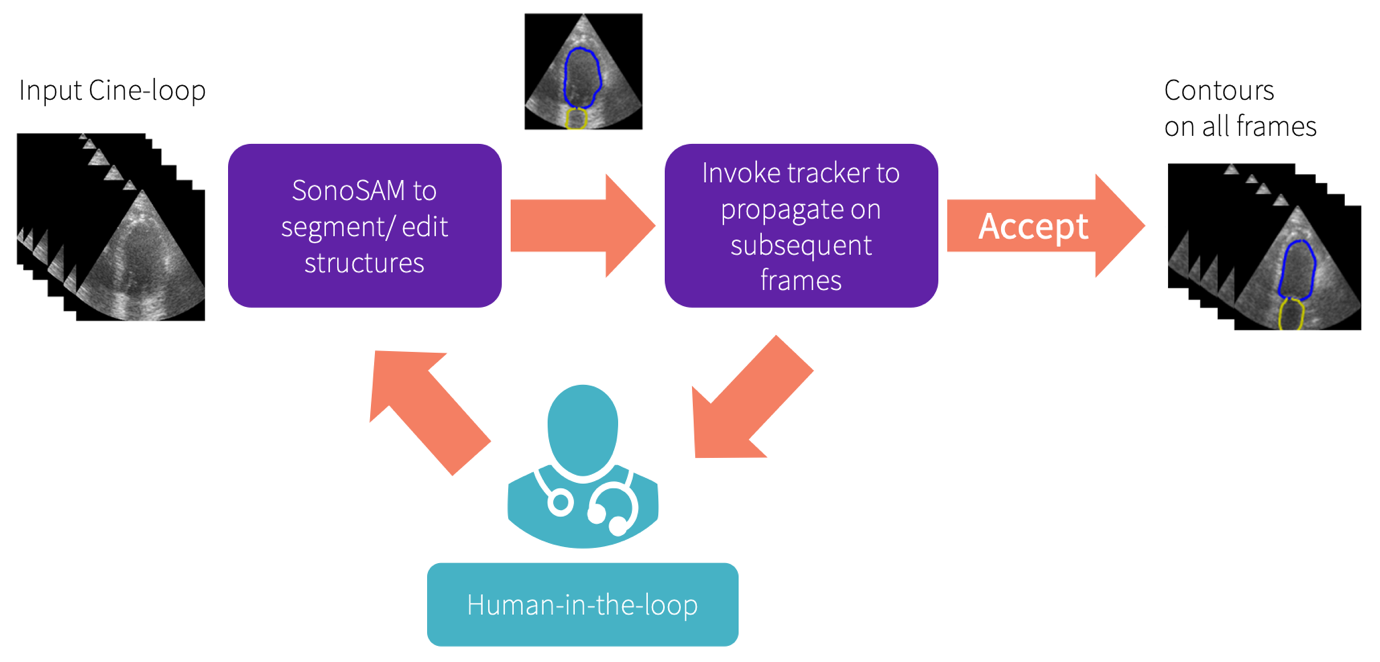

GE HealthCare is working to extend SonoSAMTrack¹ beyond basic single-image segmentation to 2D+time and 3D datasets. The work demonstrates advanced tracking techniques that facilitate real-time tracking and segmentation of moving objects within ultrasound images, such as identifying and following tumors. The model begins by delineating the object and then tracks it across subsequent frames. This feature could allow for precise analysis, with the added benefit of user intervention for on-the-fly corrections. Such a tracking system could not only help with automated precision but also empower users to course correct for optimal results.
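The segment-then-track workflow described above can be sketched as a simple loop. This is an illustrative outline only: `track_sequence`, `toy_segment`, and `toy_propagate` are hypothetical names, frames are reduced to a single scan line of intensities, and the thresholding stand-ins are not the SonoSAM segmenter or the contour tracker from the paper.

```python
def track_sequence(frames, segment, propagate, corrections=None):
    """Segment the first frame, then carry the mask forward frame by frame.

    corrections maps a frame index to a user-supplied mask that overrides
    the model output at that frame and seeds the frames that follow.
    """
    corrections = corrections or {}
    masks = []
    for index, frame in enumerate(frames):
        if index in corrections:
            mask = corrections[index]            # user intervention wins
        elif index == 0:
            mask = segment(frame)                # initial delineation
        else:
            mask = propagate(masks[-1], frame)   # track from previous frame
        masks.append(mask)
    return masks


def toy_segment(frame):
    """Stand-in segmenter: mark pixels brighter than 0.5."""
    return [1 if value > 0.5 else 0 for value in frame]


def toy_propagate(prev_mask, frame):
    """Stand-in tracker: keep bright pixels within one pixel of the previous mask."""
    mask = []
    for i, value in enumerate(frame):
        near_previous = any(prev_mask[max(0, i - 1): i + 2])
        mask.append(1 if value > 0.5 and near_previous else 0)
    return mask


# Hypothetical 1D "frames": a bright object drifting right, plus a distractor
# at the last pixel of the second frame that the tracker should ignore.
frames = [
    [0.1, 0.9, 0.8, 0.1, 0.1, 0.1],
    [0.1, 0.2, 0.9, 0.8, 0.1, 0.9],
    [0.1, 0.1, 0.2, 0.9, 0.9, 0.1],
]

automatic = track_sequence(frames, toy_segment, toy_propagate)
corrected = track_sequence(frames, toy_segment, toy_propagate,
                           corrections={1: [0, 0, 1, 0, 0, 0]})
print(automatic)
print(corrected)
```

Passing the segmenter and tracker in as functions keeps the loop independent of any particular model, and the `corrections` argument shows how a user edit at one frame changes what is propagated to later frames.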

SonoSAMTrack¹ workflow

SonoSAMLite¹

Real-time medical applications on edge devices require models that are both lightweight and powerful, balancing the need for computational efficiency with the demand for high performance in tasks like medical image analysis. To that end, GE HealthCare is researching SonoSAMLite¹, a streamlined version of the more complex SonoSAMTrack¹ model that employs a technique called knowledge distillation⁸. This process involves teaching a smaller 'student' model to replicate the behavior of a larger 'teacher' model, so that the student inherits the teacher's capabilities at a lower computational cost.
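As a rough illustration of the student-teacher idea, the sketch below computes a common soft-target distillation loss: the KL divergence between temperature-softened teacher and student outputs, scaled by the squared temperature. This is the textbook formulation, assumed here for illustration; the exact loss used for SonoSAMLite is not described in this article, and the function names and logits are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    kl = sum(t * math.log(t / s) for t, s in zip(teacher, student) if t > 0)
    return temperature ** 2 * kl


# Hypothetical per-pixel logits for (background, foreground).
teacher_logits = [-1.0, 3.0]
far_student = [1.0, 0.5]      # disagrees with the teacher
close_student = [-0.8, 2.7]   # nearly matches the teacher

print(distillation_loss(far_student, teacher_logits))
print(distillation_loss(close_student, teacher_logits))
print(distillation_loss(teacher_logits, teacher_logits))  # 0.0 when outputs match
```

Minimizing this loss over many training examples pushes the student's outputs toward the teacher's. A higher temperature softens both distributions so that the student also learns from the teacher's relative confidence, not only its top prediction.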

The ongoing research on SonoSAMLite¹ involves a two-step enhancement process that ensures practicality across various platforms:

- Fine-tuning for optimal performance: Initially, SonoSAMLite¹ is designed to be fine-tuned, which means it can be adjusted and optimized to perform well for accurately analyzing ultrasound images. This stage is crucial for ensuring that the model delivers optimal performance.

- Refinement process for efficiency: Following fine-tuning, SonoSAMLite¹ will undergo a refinement process aimed at preserving its efficiency. This ensures that the model remains lightweight and does not require excessive computational resources, allowing it to operate on devices with limited processing capabilities. This step keeps the model lean and fast.

SonoSAMLite¹ is designed to overcome the limitations of existing Foundation Models, which often fall short due to extensive memory and computational demands stemming from their training on large image datasets. Although SonoSAMLite¹ might exhibit a slight dip in initial performance, it progressively improves with continued user interactions, eventually achieving a Dice Similarity score close to that of SonoSAMTrack¹. For a more in-depth analysis, we invite you to explore the detailed findings in our paper⁹.

Conclusion

SonoSAMTrack¹ and its more compact counterpart, SonoSAMLite¹, mark innovative progress in applying artificial intelligence to ultrasound imaging technology. This research tackles prevalent issues like the high variability of imaging results and the intrinsic noise often encountered in ultrasound scans. These models have been refined to deliver high precision and efficiency in segmenting images by combining promptable segmentation with contour tracking. SonoSAMLite¹ is specifically engineered for real-time performance, even on devices with limited computational power. For healthcare professionals and institutions, these developments help envision a leap forward in diagnostic capabilities and patient care.

As we look to the future, the horizon seems promising, with AI continuing to reshape the landscape of medical imaging. There are substantial moves towards addressing the various complexities and challenges associated with AI, aligning with global efforts, and responding to industry calls for regulation. A heightened focus on regulation, security, and international coordination could shape the development and adoption of AI solutions in the healthcare industry.

Our vision is to accelerate advancements in medical imaging by introducing foundational AI technologies, thereby empowering providers and data scientists to expedite AI application development and ultimately help clinicians enhance patient care. By utilizing these versatile, generalist models, we aim to adapt more efficiently to new tasks and medical imaging modalities, often requiring far less labeled data compared to the traditional model retraining approach. This is particularly significant in the healthcare domain, where data is especially time-consuming and costly to obtain.

References

1. Technology in development that represents ongoing research and development efforts. These technologies are not products and may never become products. Not for sale. Not cleared or approved by the U.S. FDA or any other global regulator for commercial availability.

2. Moor, M., Banerjee, O., Abad, Z.S.H. et al. Foundation models for generalist medical artificial intelligence. Nature 616, 259–265 (2023). https://doi.org/10.1038/s41586-023-05881-4

3. Acosta, J.N., Falcone, G.J., Rajpurkar, P. et al. Multimodal biomedical AI. Nat Med 28, 1773–1784 (2022). https://doi.org/10.1038/s41591-022-01981-2

4. Med-PaLM: A large language model from Google Research, designed for the medical domain. https://sites.research.google/med-palm/

5. Yuhao Zhang, Hang Jiang, Yasuhide Miura, Christopher D. Manning, Curtis P. Langlotz. Contrastive Learning of Medical Visual Representations from Paired Images and Text. https://doi.org/10.48550/arXiv.2010.00747

6. Xu, Shawn, et al. ELIXR: Towards a general purpose X-ray artificial intelligence system through alignment of large language models and radiology vision encoders. https://doi.org/10.48550/arXiv.2308.01317

7. Sørensen–Dice coefficient. Wikipedia. https://en.wikipedia.org/wiki/S%C3%B8rensen%E2%80%93Dice_coefficient

8. Knowledge distillation. Wikipedia. https://en.wikipedia.org/wiki/Knowledge_distillation

9. Hariharan Ravishankar, Rohan Patil, Vikram Melapudi, Harsh Suthar, Stephan Anzengruber, Parminder Bhatia, Kass-Hout Taha, Pavan Annangi. SonoSAMTrack -- Segment and Track Anything on Ultrasound Images. https://doi.org/10.48550/arXiv.2310.16872